Introduction

Most games require physics. Every character you push around the world, every bullet you fire, every mutant beast you follow probably has some physical simulation underpinning it. Now let’s say that your game is big, like, incredibly big. Deserts, mountains, huge continents… that sort of big. How are you going to make sure that the laser-toting dinosaurs at the edge of the world work as well as those at the very center? Maybe you’ve never even considered that they wouldn’t Just Work. It’s all down to how machines represent both very large and very small values (such as distances), and most resources want you to know all about that representation before showing you the cool tricks. This post won’t delve too deeply; instead it will teach you one really useful trick to keep safe for a rainy day.

What was that about a dinosaur?

Games rely on a representation of real numbers that contains error. This is an accepted fact. For a multitude of reasons, including dedicated hardware support, flexibility and wide adoption, the standard most often adhered to is IEEE 754. This standard provides “single precision” and “double precision” formats, which in C++ correspond in practice to the datatypes float (32-bit) and double (64-bit). The standard was first published in 1985 and revised in 2008, and the Wikipedia pages for both versions are good sources for this knowledge.

Until quite recently most games used single precision. We’re now at a point of transition where modern hardware often supports 64-bit values natively, just as the 32-bit FPU did in previous generations. There are still extra overheads to consider, however, so not everyone is making the jump. In fact, they’re the same considerations you’d give to choosing between integer types (e.g. short, int, long).

Core to the workings of these “floating point” values (the generalised term, and hence the name float) is the idea that a value is only accurate to within some bound proportional to its own magnitude. For example, if you’re measuring the distance to the Sun in km, you’re probably not too bothered whether it’s accurate to within a few cm. If, however, you’re measuring the head of a pin, that precision is suddenly really important. Floating point values are great at this kind of representation, but try to represent the position of a pinhead on the Sun and you’ve got problems.
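To see the spacing between neighbouring floats grow with magnitude, the sketch below asks `std::nextafter` for the next float above a value and subtracts. `gapAbove` is a hypothetical helper name. For the inputs used here, the gaps it returns are exact powers of two.

```cpp
#include <cmath>
#include <limits>

// Distance from x to the next representable float above it.
// std::nextafter steps to the adjacent float in the direction of +infinity;
// the subtraction is exact because the two values are neighbours.
float gapAbove(float x) {
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}
```

`gapAbove(1.0f)` is 2^-23 (about 1.19e-7), `gapAbove(1.0e6f)` is 0.0625 and `gapAbove(1.5e8f)` is 16. At Sun-like distances, neighbouring floats are 16 units apart, so a pinhead-sized offset simply cannot be stored.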

In our example above, a laser-toting dinosaur needs the same level of physics stability far from the origin as it does close to it. It’s the loss of absolute precision at large distances that can send faraway dinosaurs flying into the air, or falling through the world.
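Here is a minimal sketch of how that happens. It assumes a 1D explicit Euler step with float positions, and float arithmetic evaluated in single precision (`FLT_EVAL_METHOD == 0`, the usual case on x86-64 and ARM). The `integrate` function is hypothetical and not taken from any physics engine. Near the origin, a 5 m/s walk stepped at 60 Hz covers about 5 m in a second. At 1e7 m, adjacent floats are 1 m apart, so every step of roughly 8 cm rounds away to nothing.

```cpp
// Hypothetical 1D explicit Euler integrator: advance a float position
// by velocity * dt, `steps` times.
float integrate(float position, float velocity, float dt, int steps) {
    for (int i = 0; i < steps; ++i) {
        // Near the origin the small increment survives the addition; far away
        // it can be smaller than half the gap between floats and round away.
        position += velocity * dt;
    }
    return position;
}
```

`integrate(0.0f, 5.0f, 1.0f / 60.0f, 60)` ends up very close to 5.0f. `integrate(1.0e7f, 5.0f, 1.0f / 60.0f, 60)` returns exactly 1.0e7f: the dinosaur never moves.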

What About Other Systems?

Physics is an easy target to pick because instabilities in the simulation are so easy to see: flying vehicles, ragdolls that continually twitch on the floor and all those lovely bugs. Numerical instability is often the cause of these artifacts, and floating point error is one contributor to numerical instability. The same can be said for animation (maybe a soldier’s gun doesn’t always sit perfectly in his hand), gameplay (you stood on that tiny remote switch but it didn’t register) and rendering (you can see a seam between two walls that should be invisible). In fact, most game systems can suffer, so it’s a good bit of knowledge to have to hand.

Maximum Error

This is as close to maths as the article is going to get. There’s a pretty simple way to work out the worst-case error at any scale, but first you have to learn a distinction.

Absolute Error is the actual, observable error arising from some calculation. Say you recorded your height as 180 cm, but your actual height was 180.22 cm. Your absolute error is 0.22 cm.

Relative Error is the same error expressed relative to the measurement, so it takes the scale of the value being approximated into account. It’s calculated as:

Relative Error = Absolute Error / Perfect Measurement

e.g. 0.00122 ≈ 0.22 / 180.22
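The two definitions translate directly into code. This is a minimal sketch with hypothetical helper names, using the height example above: recorded 180 cm, actual 180.22 cm.

```cpp
#include <cmath>

// Absolute error: how far the recorded value is from the actual value.
double absoluteError(double recorded, double actual) {
    return std::fabs(actual - recorded);
}

// Relative error: the absolute error divided by the actual ("perfect") value,
// so the scale of the measurement is taken into account.
double relativeError(double recorded, double actual) {
    return absoluteError(recorded, actual) / std::fabs(actual);
}
```

`absoluteError(180.0, 180.22)` is 0.22 (give or take double rounding). `relativeError(180.0, 180.22)` comes out at about 0.00122.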

For every floating point calculation you make, you could measure the error and see this for yourself. What’s particularly interesting, though, is that for normalised floating point values the relative error is bounded by a constant that doesn’t depend on the magnitude. That constant is expressed through the Machine Epsilon, which is defined for each floating point type. In C++ terms, epsilon is the gap between 1.0 and the next representable value. With round-to-nearest, a single rounding is off by at most half that gap. Another way of looking at the half gap: it’s the largest number you can add to 1.0 and still get 1.0. Either way, using the full epsilon as the relative error gives a conservative bound on the worst-case error.
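The sketch below checks these claims. It assumes IEEE 754 floats with the default round-to-nearest mode, and treats a double as the “perfect” value. `floatRoundingRelativeError` and `kFloatUnitRoundoff` are hypothetical names.

```cpp
#include <cmath>
#include <limits>

// Unit roundoff for float: half of machine epsilon (2^-24). This is the
// worst-case relative error of a single round-to-nearest operation.
constexpr double kFloatUnitRoundoff =
    static_cast<double>(std::numeric_limits<float>::epsilon()) / 2.0;

// Relative error introduced by rounding a double (treated as the perfect
// value) to the nearest float. x must be non-zero and within float range.
double floatRoundingRelativeError(double x) {
    const float rounded = static_cast<float>(x);
    return std::fabs(static_cast<double>(rounded) - x) / std::fabs(x);
}
```

Adding epsilon to 1.0f changes it, but adding half of epsilon does not. For any sample you try, `floatRoundingRelativeError(x)` stays at or below `kFloatUnitRoundoff`, whether x is 0.1 or 2e9.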

Rearranging the equation above gives:

Absolute Error = Relative Error * Perfect Measurement

Substitute epsilon for the relative error and your expected value for the perfect measurement, and you get a worst-case absolute error back.
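Here is the rearranged formula as a function template. It is a minimal sketch that uses `std::numeric_limits<T>::epsilon()` as the relative error, which is the conservative choice described above. `worstCaseAbsoluteError` is a hypothetical name.

```cpp
#include <cmath>
#include <limits>

// Conservative worst-case absolute error for a value of type T:
// Absolute Error = Relative Error * Perfect Measurement,
// with machine epsilon standing in for the relative error.
template <typename T>
T worstCaseAbsoluteError(T value) {
    return std::numeric_limits<T>::epsilon() * std::fabs(value);
}
```

`worstCaseAbsoluteError(2.0e9f)` is about 238 and `worstCaseAbsoluteError(1.495979e8f)` about 17.8. For comparison, the actual spacing between floats near 1.5e8 is 16, so a single rounding there is off by at most 8. The epsilon-based figure is deliberately pessimistic. The same call with a double argument gives roughly 3.3e-8.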

Putting it together

C++ has a handy class template, std::numeric_limits, specialised for all the arithmetic types. It’s the recommended way to query proper limits. Its values mirror the C library macro constants (FLT_MAX, FLT_MIN, FLT_EPSILON and friends), and it gives you a unified way to write:

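```cpp
#include <limits>

// Largest finite float: 3.40282e+38
const float fMax = std::numeric_limits<float>::max();

// Smallest positive normalised float: 1.175494e-38
// (use lowest() for the most negative finite value)
const float fMin = std::numeric_limits<float>::min();

// Machine epsilon, the gap between 1.0f and the next float: 1.192092896e-7
const float fEpsilon = std::numeric_limits<float>::epsilon();
```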

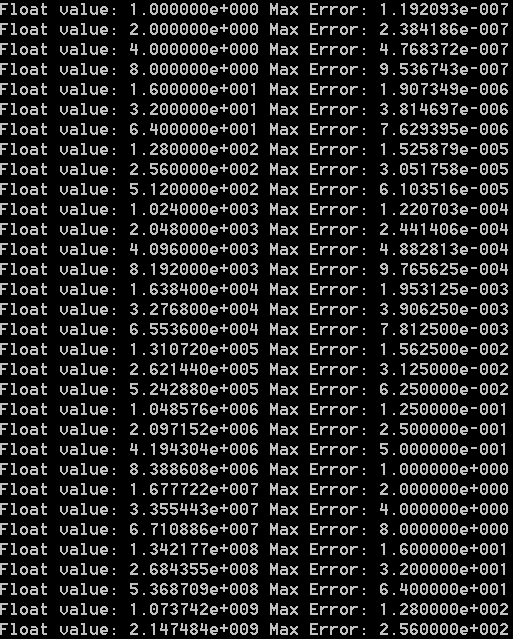

I put some examples of this error calculation up on GitHub. It’s not the greatest bit of code I’ve ever written, but it helped me visualise things a little. Some snippets:

In particular, notice how the error grows with the value: 2,000,000,000 has a worst-case error in the region of 250. These values are abstract, but in practice you’ll assign (probably metric) units to them. For example…

The average distance to the Sun is 149,597,887.5 km. If one unit is 1 km, a single kilometer is represented by the float 1.0f.

Applying the above: 1.495979e8 has a maximum error of 1.783346e1, or around 18 km! Relative to a distance of nearly 150 million km that’s very precise, even if it doesn’t feel like it because of the scales involved.

Back to the Beginning – Picking a world

Most physics middleware has the concept of a ‘world’. It’s usually a box of some defined dimensions, and all the physics is simulated inside it. The box usually has default dimensions that you know will just work, and you can usually override them.

Overriding those dimensions generally isn’t too popular. You’re more likely to recalculate the origin offset of the physics world, keeping simulated values small while giving the impression of a much larger world. Sometimes, though, you’d benefit from squeezing out just a few hundred or thousand more meters of simulation, and it’s in those cases that you apply the calculation above.
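Recalculating the origin offset is often called a floating origin or origin rebasing. This is a minimal sketch. It assumes world positions are tracked in double precision outside the physics engine, and that only float offsets from a movable origin are handed to the engine. `WorldPos`, `LocalPos` and `FloatingOrigin` are hypothetical names, not any middleware’s API. A real engine would also need to shift every existing body by the same offset whenever the origin moves.

```cpp
#include <cmath>

// Hypothetical world-space position, stored in double precision.
struct WorldPos { double x, y, z; };

// Hypothetical physics-space position, stored as floats near the origin.
struct LocalPos { float x, y, z; };

class FloatingOrigin {
public:
    // Subtract the origin in double precision, then round to float, so the
    // physics engine only ever sees small values.
    LocalPos toLocal(const WorldPos& p) const {
        return { static_cast<float>(p.x - origin_.x),
                 static_cast<float>(p.y - origin_.y),
                 static_cast<float>(p.z - origin_.z) };
    }

    WorldPos toWorld(const LocalPos& p) const {
        return { origin_.x + p.x, origin_.y + p.y, origin_.z + p.z };
    }

    // Move the origin to `focus` (e.g. the player) once it strays further
    // than maxDistance. Returns true when a rebase happened, in which case
    // every body in the physics world must be shifted by the same offset.
    bool rebaseIfNeeded(const WorldPos& focus, double maxDistance) {
        const double dx = focus.x - origin_.x;
        const double dy = focus.y - origin_.y;
        const double dz = focus.z - origin_.z;
        if (std::sqrt(dx * dx + dy * dy + dz * dz) <= maxDistance) {
            return false;
        }
        origin_ = focus;
        return true;
    }

    const WorldPos& origin() const { return origin_; }

private:
    WorldPos origin_{0.0, 0.0, 0.0};
};
```

Consider a dinosaur at x = 10,000,000.25 m. With the origin at zero, it lands in physics space at exactly 10,000,000 and the quarter meter is lost. After rebasing the origin to 10,000,000, the same dinosaur sits at 0.25.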

Now that you know how to calculate worst-case absolute errors for different world sizes, all you really need to know is what fidelity your physics engine can cope with. Make sure the worst-case error never breaks that contract and you should be good to go.
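Turned around, the same formula tells you how big a world a given tolerance allows: extent = tolerance / epsilon. This is a minimal sketch with hypothetical helper names. The 1 mm tolerance in the usage note is an assumed example, not a figure from any particular physics engine.

```cpp
#include <limits>

// Largest coordinate magnitude at which the epsilon-based worst-case error
// stays within `tolerance` (both in world units, e.g. meters).
// Rearranged from: tolerance = epsilon * extent.
double maxWorldExtent(double tolerance) {
    return tolerance / static_cast<double>(std::numeric_limits<float>::epsilon());
}

// True if every float coordinate up to `extent` respects the tolerance.
bool worldSizeIsSafe(double extent, double tolerance) {
    return static_cast<double>(std::numeric_limits<float>::epsilon()) * extent <= tolerance;
}
```

Take coordinates in meters and an assumed tolerance of 1 mm. Then `maxWorldExtent(0.001)` is about 8,388.6 m from the origin in any direction. `worldSizeIsSafe(10000.0, 0.001)` is false.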

Remember also that, though the convention is to work in meters, there’s nothing wrong with altering that to give more or less precision at the lower end. Physics engines commonly support this, and popular game engines often take advantage of it.

Finito

It’s worth mentioning again that this is a demonstration of worst-case error. You’ll more likely see smaller errors. However, if your artists are producing awesome new vehicles, or your level designers are building near the world limits, the possibility of a worse error always exists. In my experience it’s better to prepare for that than to find out the horrible way two weeks before ship date.

Other representations are always worth checking out too. Doubles (double precision floating point) have a larger range and can represent values more accurately. Fixed point is useful for network transmission, and I occasionally hear of people choosing their own representations.
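Here is a minimal sketch of fixed point for transmission. It assumes positions are known to lie within a fixed range, and quantises each coordinate to a 16-bit integer with a uniform step. `Quantizer16` is hypothetical, not any networking library’s API, and it needs C++17 for `std::clamp`. Unlike a float, the absolute error is the same everywhere in the range: at most half a step.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical quantiser: maps a coordinate in [-halfExtent, +halfExtent]
// onto a 16-bit unsigned integer with a uniform step size.
struct Quantizer16 {
    double halfExtent;

    // Distance between adjacent quantised values.
    double step() const { return (2.0 * halfExtent) / 65535.0; }

    // Clamp into range, shift to [0, 2 * halfExtent], then round to the
    // nearest step.
    std::uint16_t encode(double value) const {
        const double clamped = std::clamp(value, -halfExtent, halfExtent);
        return static_cast<std::uint16_t>(std::lround((clamped + halfExtent) / step()));
    }

    // Inverse mapping; the result is within step() / 2 of the clamped input.
    double decode(std::uint16_t q) const {
        return q * step() - halfExtent;
    }
};
```

With `halfExtent = 4096`, the step is 8192 / 65535, roughly 0.125. If the units are meters, every coordinate round-trips to within about 6 cm.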

These Guys know their stuff

People who’ve helped to form the above:

Actual Books

- Essential Mathematics For Games & Interactive Applications by James M. Van Verth and Lars M. Bishop has an incredible chapter on fixed and floating point representation.

- Real-Time Collision Detection by Christer Ericson has an excellent section on floating point.

Blogs and Sites

- Fantastic float ‘converter’ tool – great for scratchpad visualisation

- This Gamasutra article is one of the best non-maths articles on floating point I’ve ever read. My GitHub source includes some of the calculations cited on that site as part of the error demonstrations.

- Glenn Fiedler’s blog, always a fascinating read.